OpenAI’s Codex variant of GPT-5.2 points to a future where software doesn’t just get written faster—it actively maintains and defends itself.

Why This Story Exists Now

As GPT-5 enters production environments in early 2026, most public attention remains fixed on surface improvements: reasoning benchmarks, multimodal polish, and general assistant upgrades. That focus misses a quieter but more consequential shift happening inside OpenAI’s developer tooling.

The release of GPT-5.2-Codex marks a transition away from AI as a code generator toward AI as a long-running software agent—one capable of monitoring, repairing, and stabilizing complex systems over extended periods of time.

This matters now because modern software rarely fails at the moment of creation. It fails later—through dependency drift, security gaps, and unattended edge cases. GPT-5.2-Codex is designed for that phase of the lifecycle.

Information Gain

What readers learn here—and will not find clearly explained in most coverage—is how GPT-5.2-Codex enables long-duration, autonomous software maintenance rather than short-burst code generation.

Three technical behaviors define this shift:

- Native Context Compaction

GPT-5.2-Codex can sustain 24-hour autonomous sessions, maintaining logical coherence across very large repositories. Benchmarks and early enterprise testing indicate it can handle refactors lasting 7+ hours without human intervention, a sharp increase from the roughly 30-minute degradation limits typical of 2025 models.

- Live Knowledge via the OpenAI Web Layer (OWL)

When deployed in agent configurations, Codex can reference current third-party documentation rather than relying solely on training-era assumptions. This allows it to adapt to API changes, SDK deprecations, and security advisories in near real time.

- Verified Long-Horizon Performance

In January 2026 disclosures, GPT-5.2-Codex reached 56.4% on SWE-Bench Pro, a benchmark designed to measure real-world software engineering over extended tasks rather than single-file puzzles.

Together, these behaviors explain why Codex is being evaluated not as a productivity tool, but as maintenance infrastructure.

The Content Gap

Mainstream GPT-5 coverage largely treats Codex as a faster or more accurate way to write code. Even developer-focused reporting rarely moves beyond feature lists.

What is missing is analysis of defensive and operational use cases:

- Incident containment

- Dependency drift mitigation

- Overnight vulnerability response

Most articles ask, “Can it write better code?”

This article answers, “Can it keep production systems stable when no one is watching?”

That distinction reflects how software actually fails—and how value is created in mature systems.

Deep Analysis

From Assistant to Agent: Why Context Compaction Changes Everything

Earlier models handled large projects by expanding context windows, a brute-force approach that degraded as irrelevant tokens accumulated. GPT-5.2-Codex exhibits native context compaction, compressing low-signal elements such as boilerplate and imports while preserving active logic at full resolution.

The result is continuity:

- Multi-file reasoning without regressions

- Persistent awareness of architectural intent

- Reduced “forgotten assumptions” during long sessions

This is the technical prerequisite for autonomous agents that operate for hours—or days—without collapse.
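Because the article does not describe how compaction is implemented, the following is only a toy illustration of the idea described above, not Codex's actual mechanism. It assumes a hypothetical `compact_source` helper that treats import lines, blank lines, and standalone comments as low-signal, collapses all imports into one summary line, and keeps every other line verbatim as active logic.

```python
def compact_source(source: str) -> str:
    """Toy context compaction for Python source text (hypothetical helper).

    Import lines are collapsed into a single summary line, blank lines and
    standalone comments are dropped, and all other lines are kept verbatim.
    """
    import_count = 0
    kept: list[str] = []
    for line in source.splitlines():
        stripped = line.strip()
        if stripped.startswith(("import ", "from ")):
            import_count += 1  # low-signal: summarized, not kept
        elif not stripped or stripped.startswith("#"):
            continue  # low-signal: dropped entirely
        else:
            kept.append(line)  # active logic stays at full resolution
    header = [f"# [compacted {import_count} import line(s)]"] if import_count else []
    return "\n".join(header + kept)


if __name__ == "__main__":
    src = "import os\nimport sys\n\n# helper\ndef f(x):\n    return x + 1\n"
    print(compact_source(src))
```

A real compactor would rely on parsed syntax and relevance signals rather than string prefixes; this version, for instance, would misclassify a docstring line that happens to begin with "from ".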

Self-Healing Through Live Documentation (OWL)

Many real-world failures are caused not by bad logic, but by environmental change:

- API version bumps

- Authentication updates

- Deprecated methods

Through the OpenAI Web Layer, Codex agents can verify current documentation when explicitly enabled, allowing proposed fixes to reflect present-day reality, not historical training data.

This capability underpins what teams increasingly describe as self-healing software: systems that adapt fast enough to reduce downtime before humans intervene.
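Dependency drift is one concrete slice of this. As a minimal sketch, assume an agent has already extracted each package's minimum still-supported version from current documentation into a plain dictionary. The `min_supported` table, the `find_drift` helper, and all package versions below are hypothetical and illustrative, not real advisories. Comparing that table against a project's pins then reduces to a version comparison:

```python
def parse_version(version: str) -> tuple[int, ...]:
    """Parse a plain dotted numeric version like '2.31.0' into (2, 31, 0)."""
    return tuple(int(part) for part in version.split("."))


def find_drift(pins: dict[str, str], min_supported: dict[str, str]) -> list[str]:
    """Return pinned packages older than the minimum version that the
    (hypothetical) live-documentation table still lists as supported."""
    return sorted(
        name
        for name, pinned in pins.items()
        if name in min_supported
        and parse_version(pinned) < parse_version(min_supported[name])
    )


if __name__ == "__main__":
    pins = {"requests": "2.25.1", "urllib3": "2.2.0", "numpy": "1.26.4"}
    # Stand-in for data fetched from current docs; values are illustrative only.
    min_supported = {"requests": "2.31.0", "urllib3": "2.0.0"}
    print(find_drift(pins, min_supported))  # ['requests']
```

Tuple comparison only handles purely numeric versions; real tooling would use `packaging.version.Version`, which also understands pre-release and post-release tags.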

Defensive Cyber: Closing the Zero-Day Gap

Shadow Patching as an Emergent Pattern (Analysis)

Security teams face a persistent problem: the zero-day gap between vulnerability discovery and human response. GPT-5.2-Codex is being tested as a way to shrink that window.

One emerging workflow involves “shadow patches”—temporary mitigations generated and tested in sandboxed environments. These are not permanent fixes, but stopgaps designed to:

- Add validation layers

- Disable risky features

- Rate-limit attack vectors
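To make these three stopgaps concrete, here is a minimal Python sketch of a shadow patch applied as a decorator around an existing request handler: a feature-flag kill switch, a per-client token-bucket rate limit, and a validation layer that rejects oversized or NUL-containing payloads. The handler, the `FEATURE_FLAGS` table, the `shadow_patch` decorator, and all limits are hypothetical; a real mitigation would target the specific vulnerable code path and be exercised in a sandbox before rollout.

```python
import time
from functools import wraps
from typing import Callable, Dict, Optional, Tuple

Response = Tuple[int, str]

# Hypothetical kill switches; flipping one to False disables a risky feature.
FEATURE_FLAGS: Dict[str, bool] = {"upload": True}


class TokenBucket:
    """Per-client token bucket: refills at `rate` tokens/second up to `capacity`."""

    def __init__(self, rate: float, capacity: int) -> None:
        self.rate = rate
        self.capacity = capacity
        self.tokens: Dict[str, float] = {}
        self.updated: Dict[str, float] = {}

    def allow(self, client: str, now: Optional[float] = None) -> bool:
        now = time.monotonic() if now is None else now
        last = self.updated.get(client, now)
        tokens = self.tokens.get(client, float(self.capacity))
        # Refill for the time elapsed since this client's last request.
        tokens = min(float(self.capacity), tokens + (now - last) * self.rate)
        self.updated[client] = now
        allowed = tokens >= 1.0
        self.tokens[client] = tokens - 1.0 if allowed else tokens
        return allowed


def shadow_patch(bucket: TokenBucket, max_len: int, flag: str):
    """Wrap a handler with temporary mitigations; remove once a real fix ships."""

    def decorate(handler: Callable[[str, str], Response]):
        @wraps(handler)
        def wrapped(client: str, payload: str, now: Optional[float] = None) -> Response:
            # Unknown flags fail closed: the feature stays disabled.
            if not FEATURE_FLAGS.get(flag, False):
                return (503, "feature temporarily disabled")
            # Rate-limit first so attack traffic is throttled before any parsing.
            if not bucket.allow(client, now):
                return (429, "rate limited")
            # Validation layer in front of the vulnerable code path.
            if len(payload) > max_len or "\x00" in payload:
                return (400, "rejected by validation layer")
            return handler(client, payload)

        return wrapped

    return decorate


@shadow_patch(TokenBucket(rate=1.0, capacity=2), max_len=1024, flag="upload")
def handle_upload(client: str, payload: str) -> Response:
    return (200, f"stored {len(payload)} bytes")
```

Keeping every mitigation in one clearly named wrapper makes the stopgap easy to audit and, just as important, easy to delete once the permanent fix lands.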

In late December 2025, Codex-assisted analysis contributed to identifying multiple critical React vulnerabilities, according to industry reporting. While humans remained responsible for disclosure and remediation, AI-assisted discovery accelerated the response timeline.

The value here is not autonomy, but time bought under pressure.

Winner vs Loser Breakdown

| Group | Likely Outcome |
| --- | --- |
| Small Engineering Teams | Gain continuous defensive coverage without proportional hiring |
| Enterprise Security Ops | Faster containment, lower mean time to resolution |
| Legacy Static Scanners | Lose relevance without agent integration |
| Manual QA Roles | Shift toward oversight, validation, and policy enforcement |

Strategic Impact

Business Model Implications

As AI systems assume maintenance responsibilities, value shifts from developer hours to system resilience. Pricing models increasingly resemble infrastructure subscriptions rather than productivity tools.

Platform Shift

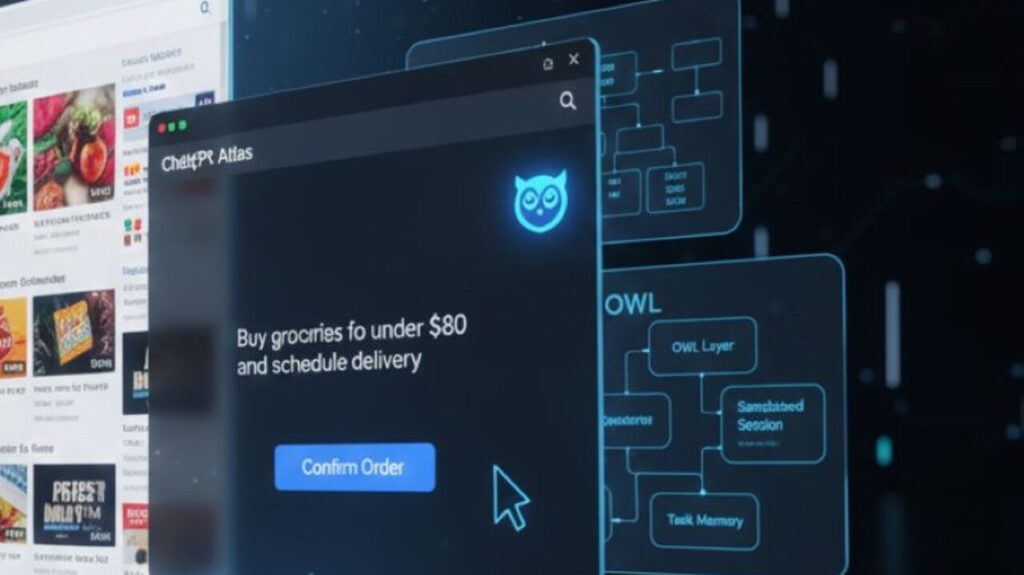

GPT-5.2-Codex reinforces OpenAI’s broader move—also visible in its browser and monetization strategies—toward becoming a foundational layer, not a destination app.

(See: ChatGPT Atlas vs Chrome and OpenAI’s 2026 Ads Pivot on Tech Plus Trends.)

Why This Matters (Second-Order Effects)

Jobs:

Developers move toward architectural judgment and oversight rather than routine fixes.

Creators:

Open-source maintainers may rely on agents to manage issues and patches, reshaping contribution dynamics.

Users:

Fewer outages and faster recoveries, often without visible human action.

Industry Power Shifts:

Control over agent platforms increasingly determines who governs the software lifecycle.

Regulatory / Antitrust / Policy Angle

Autonomous code modification raises unresolved questions:

- Liability for AI-generated mitigations

- Auditability of machine decisions

- Limits on unattended operation in critical infrastructure

These issues are likely to surface as Codex-style agents move from pilots into regulated environments.

What Happens Next

Short-Term (2026):

- Restricted execution and sandboxing as defaults

- Human approval checkpoints

- Defensive use cases outpacing creative ones

Long-Term:

- AI-managed codebases with periodic audits

- Standards for autonomous maintenance

- New categories of software resilience tooling

What to Do Now: Running GPT-5.2-Codex Safely on Windows

For Windows-based teams exploring Codex agents:

- Enable WSL 2

Running agents inside the Windows Subsystem for Linux gives long-running sessions better isolation and stability.

- Use the Codex CLI or VS Code Codex Extension

These tools support agent workflows and restricted execution.

- Configure High-Reasoning Mode Carefully

In configuration files (e.g., config.toml), teams are setting:

  - model = "gpt-5.2-xhigh"
  - approval_policy = "on-request"

- Adopt a Zero-Trust Posture

Limit file access, restrict network domains, and require explicit approval for destructive actions.

The goal is autonomy with containment, not blind delegation.
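The Codex tools provide their own sandbox and approval settings, and those should remain the first line of defense. Purely as an illustration of the zero-trust rules above, here is a hypothetical policy check that a team-owned wrapper might apply to each shell command an agent proposes: deny paths outside the workspace or containing `..`, deny URLs outside a domain allowlist, and route destructive commands to a human. The `Policy` fields, the allowlists, and the `review` function are all assumptions, not part of any Codex API.

```python
import shlex
from dataclasses import dataclass
from pathlib import PurePosixPath
from urllib.parse import urlparse


@dataclass(frozen=True)
class Policy:
    # Hypothetical zero-trust settings; adjust to your own environment.
    allowed_roots: tuple[str, ...] = ("/workspace",)
    allowed_domains: frozenset[str] = frozenset({"pypi.org", "docs.python.org"})
    destructive: frozenset[str] = frozenset({"rm", "dd", "mkfs", "chmod", "chown"})


def review(command: str, policy: Policy) -> str:
    """Classify a proposed shell command as 'allow', 'ask', or 'deny'."""
    try:
        argv = shlex.split(command)
    except ValueError:
        return "deny"  # unbalanced quotes: refuse rather than guess
    if not argv:
        return "deny"
    for arg in argv[1:]:
        if "://" in arg:
            # Network access only to allowlisted hosts.
            if (urlparse(arg).hostname or "") not in policy.allowed_domains:
                return "deny"
            continue
        path = PurePosixPath(arg)
        if ".." in path.parts:
            return "deny"  # block relative escapes such as ../../etc
        if path.is_absolute() and not any(
            path.is_relative_to(root) for root in policy.allowed_roots
        ):
            return "deny"  # file access outside the workspace
    if PurePosixPath(argv[0]).name in policy.destructive:
        return "ask"  # destructive actions need explicit human approval
    return "allow"
```

String-level checks like these are easy to bypass (a path hidden inside an argument such as `--output=/etc/x` slips through), which is why they belong on top of OS-level sandboxing, never in place of it.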

Related articles:

| Article | Link |
| --- | --- |
| OpenAI 2026 Pivot & Gumdrop Pen | tpt.li/gpt-ads |
| Foxconn Supply Chain | tpt.li/openai-supply |
| ChatGPT Atlas vs. Google Chrome | tpt.li/atlas-browser |
| $1B Disney x OpenAI Sora Deal | tpt.li/disney-sora |

FAQ

1. Is GPT-5.2-Codex the same as ChatGPT?

Answer: No. Codex is optimized for software engineering and agent workflows.

2. Can it run unattended for 24 hours?

Answer: Yes, in controlled environments with safeguards.

3. Does this replace human engineers?

Answer: No. It shifts their role toward oversight and strategy.

4. Is shadow patching production-safe?

Answer: Only as a temporary mitigation, never as a final fix.

5. Why does SWE-Bench Pro matter more than coding demos?

Answer: It measures real, long-horizon software tasks.

Final Takeaway

GPT-5.2-Codex signals a shift from creation to care. Its importance lies not in writing more code, but in keeping existing systems alive, secure, and resilient. In 2026, the most valuable software may be the kind that quietly fixes itself.

Sources

- OpenAI research and developer documentation

- SWE-Bench Pro benchmark methodology

- Industry reporting on AI-assisted vulnerability discovery

- Internal: ChatGPT Atlas vs Chrome

- Internal: OpenAI’s 2026 Pivot Toward Ads

Author Bio

Saameer Go is the founder and editor of Tech Plus Trends. He covers artificial intelligence platforms, developer infrastructure, and the long-term implications of autonomous systems, with a focus on separating technical reality from hype.